This post walks through how to create a Data Studio dashboard like the one below using Matomo analytics data.

The components used to put this together include:

- A Matomo installation

- Python

- Big Query

- Data Studio

The code related to this project are in this repository on Github: https://github.com/quincysmiith/matomo_to_bq

Collecting data from Matomo installation

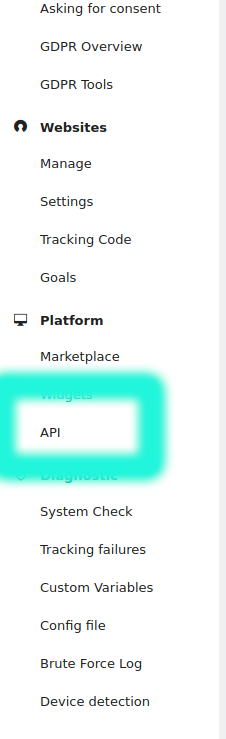

If you need a password to log in and access the data in Matomo then a token will be needed to access the data via the API.

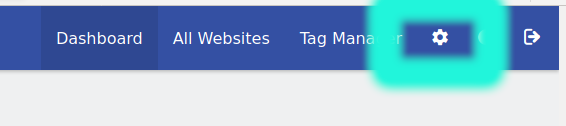

To find your token navigate to the “Admin” section in the Matomo installation.

Then click API in the left hand menu under the Platform section.

On the next screen click on the token to reveal the full value.

With this information it is possible to now access the Analytics data using python.

Data from the Matomo API can be collected by hitting the endpoint with the appropriately formatted url.

The Matomo documentation describes the possibilities for reporting with the API and the various settings.

For a report of basic metrics over the last 30 days, the URL string can be composed as follows:

# Build url string

base_url = 'https://marquinsmith.com/piwik/piwik?module=API'

site_num = '&idSite=1'

return_format = '&format=json'

period = '&period=day'

date_range = '&date=last30'

method = '&method=VisitsSummary.get'

token_string = "&token_auth=" + token

my_url = base_url + site_num + return_format + period + date_range + method + token_string

This will create a URL of the following form

https://marquinsmith.com/piwik/piwik?module=API&idSite=1&format=json&period=day&date=last30&method=VisitsSummary.get&token_auth=abcd****The entire function that builds the URL and collects the data is described below:

import json

import requests

import pandas as pd

from dotenv import load_dotenv

def get_basic_metrics():

"""

Collects basic metrics from Matomo installation on Marquinsmith.com

and returns a pandas dataframe

"""

token = os.getenv("TOKEN")

# Build url string

base_url = 'https://marquinsmith.com/piwik/piwik?module=API'

site_num = '&idSite=1'

return_format = '&format=json'

period = '&period=day'

date_range = '&date=last30'

method = '&method=VisitsSummary.get'

token_string = "&token_auth=" + token

my_url = base_url + site_num + return_format + period + date_range + method + token_string

# send request for report

r = requests.get(my_url)

# parse and tidy collected data

data = pd.DataFrame(r.json()).T

data = data.reset_index()

data.columns = [

"date",

"uniq_visitors",

"users",

"visits",

"actions",

"visits_converted",

"bounces",

"sum_visit_length",

"max_actions",

"bounce_rate",

"actions_per_visit",

"avg_time_on_site",

]

return data

Pushing data into Big Query

To send data into Big Query the right credentials and authorisation is needed, as well as a project in Google Cloud Platform to use.

For more details on how to set this up see this post: https://marquinsmith.com/2020/03/15/interacting-with-big-query-using-pandas/

Once the data is collected from Matomo this can be uploaded to Big Query using the below function.

import os

import pandas as pd

from google.oauth2 import service_account

def upload_to_bq(a_dataframe):

"""

load dataframe into Big Query.

Specifically the basicmetrics table.

"""

service_account_json = os.getenv("SERVICE_ACCOUNT_JSON")

project_id = os.getenv("PROJECT_ID")

# create Google credentials from service account json file

credentials = service_account.Credentials.from_service_account_file(

service_account_json,

)

a_dataframe.to_gbq(

"marquinsmith_dot_com.basicmetrics",

project_id=project_id,

if_exists="replace",

credentials=credentials,

)

return None

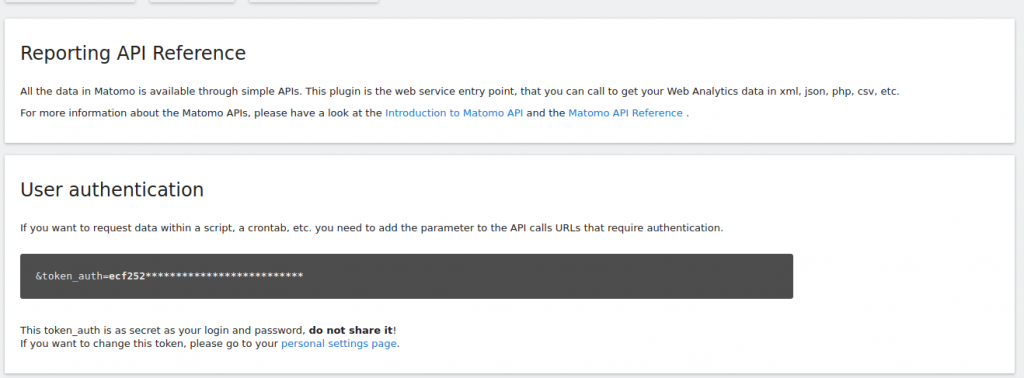

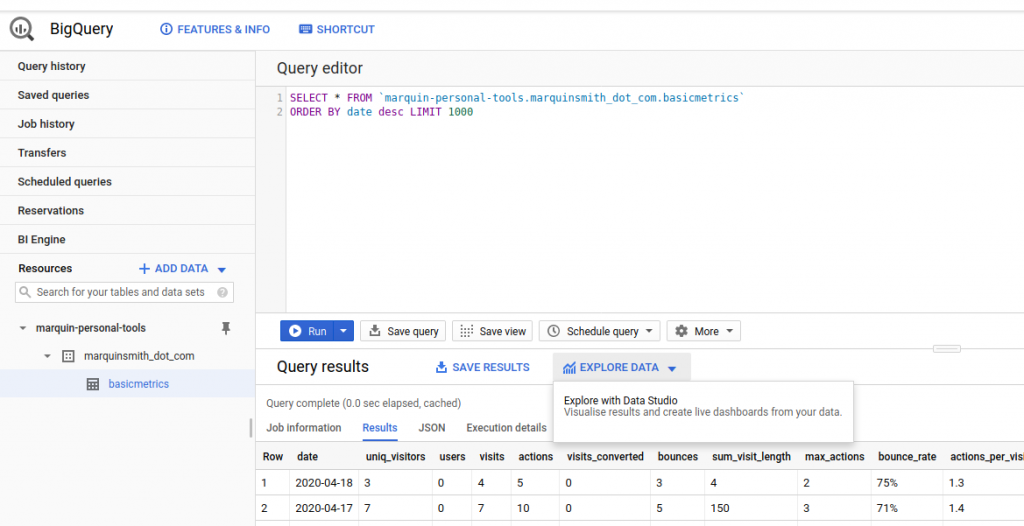

After successfully running the upload to Big query, the data will be able to be interacted with using the Big Query interface.

Connecting Google Data Studio with Big Query

Data Studio and Big Query are both Google products. The benefit of this is that they work very well together.

To start building a data studio report using this data is as simple as clicking “EXPLORE DATA”, then selecting the “Explore with Data Studio” option.

This will open a new Data Studio report that is connected to the data.