Previously we looked at getting some interesting pieces of information from an article. This included data such as:

- Article Title

- Article Publish date

- Text of the article

- Keywords in the article

- A summary of the article generated with NLP (Natural Language Processing)

Now we are going to expand on that by getting the data for a number of articles and saving the information to disk locally.

I have a text file, `crawl_list.txt`, containing the list of article URLs I want to extract information from:

https://www.foxsports.com.au/nrl/nrl-premiership/teams/sharks/james-maloneys-shock-confession-over-relationship-with-shane-flanagan/news-story/7134a366ebb93358cb23a2291ff78409

https://www.foxsports.com.au/nrl/nrl-premiership/teams/cowboys/michael-morgan-reveals-chances-of-nrl-return-for-cowboys-in-round-2/news-story/b05717e27eae7b4b9e37600f52bc6d27

https://www.foxsports.com.au/nrl/nrl-premiership/round-2-nrl-late-mail-michael-morgan-races-the-clock-valentine-holmes-fullback-switch/news-story/7aa197f891d0040e4cbf40379f8d008c

https://www.foxsports.com.au/football/socceroos/bert-van-marwijk-trims-socceroos-squad-for-friendlies-with-norway-and-colombia/news-story/2fc3aca788a999cf5db95d7d5cc9a027

https://www.foxsports.com.au/football/premier-league/teams/manchester-united/romelu-lukaku-accuses-manchester-united-colleagues-of-hiding-in-loss-to-sevilla/news-story/27594ec0847505167c5a4edab34bff0a

https://www.foxsports.com.au/football/uefa-champions-league/joes-mourinho-ive-sat-in-this-chair-twice-before-with-porto-with-real-madrid/news-story/2f09690c42efefe58405356c3148f01b

https://www.foxsports.com.au/football/a-league/aleague-hour-on-fox-sports-mark-bosnichs-indepth-expansion-plan-to-fix-the-aleague/news-story/ecbb2ded5a8e105b58e435f6de92ed8d

https://www.foxsports.com.au/football/asian-champions-league/live-asian-champions-league-melbourne-victory-v-kawasaki-frontale/news-story/51a78d57a1e600f239d9a2effffbdd66

https://www.foxsports.com.au/football/socceroos/massimo-luongos-qpr-recorded-a-shock-31-win-at-mile-jedinaks-promotion-hopefuls-aston-villa/news-story/2e8e0eccac5ab8595a9f02e76cf6dec0

https://www.foxsports.com.au/football/asian-champions-league/sydney-fc-cannot-afford-another-loss-in-the-asian-champions-league-when-they-play-kashima-antlers/news-story/99e01e4b4baf375135432d801faee669

The following snippet reads the text file into a list of URLs and defines a helper function that extracts the information from each article.

```python
from newspaper import Article

# Extract URLs from the text file, one URL per line
with open("crawl_list.txt", "r") as url_list_file:
    # strip() removes the trailing newline; blank lines are skipped
    crawlList = [line.strip() for line in url_list_file if line.strip()]

print(crawlList)


# Helper function to extract information from a given article
def ArticleDataExtractor(some_url):
    '''Pull out all key information from a given article URL.'''
    output = {}

    # Download and parse the article
    article = Article(some_url)
    article.download()
    article.parse()

    output['url'] = some_url
    output['authors'] = article.authors
    # publish_date is a datetime (or None); str() makes it JSON-friendly
    output['pubDate'] = str(article.publish_date)
    output['title'] = article.title
    output['text'] = article.text

    # Do some NLP to get the keywords and a summary
    article.nlp()
    output['keywords'] = article.keywords
    output['summary'] = article.summary

    return output
```

The next question is how, and in what format, to save the extracted information for each article. For this example JSON seems the most appropriate choice, since each article's data is already a Python dictionary of strings and lists.
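One detail worth knowing before saving: the `json` module only serializes basic types (strings, numbers, lists, dicts, `None`), which is why the extractor converts `publish_date` with `str()`. The sketch below uses a hypothetical record, not real article data, to show the error you would otherwise hit and an alternative using the `default=` hook of `json.dumps`; `json_fallback` is an illustrative name.

```python
import json
from datetime import datetime

# A hypothetical record shaped like the dictionary ArticleDataExtractor returns
record = {'title': 'Example', 'pubDate': datetime(2018, 3, 14, 9, 30)}

# json cannot serialize datetime objects directly, so this raises TypeError
try:
    json.dumps(record)
except TypeError as err:
    print('Cannot serialize:', err)

# Option 1: convert the date to a string first, as ArticleDataExtractor does with str()
print(json.dumps({**record, 'pubDate': str(record['pubDate'])}))


# Option 2: let json call a fallback function for any type it does not know
def json_fallback(value):
    if isinstance(value, datetime):
        return value.isoformat()
    raise TypeError(f'{type(value).__name__} is not JSON serializable')


print(json.dumps(record, default=json_fallback))
```

One difference between the two: with `str()`, an article with no detected date is saved as the string `"None"`, whereas with the `default=` approach `None` is written as JSON `null`, which is easier to filter out later.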

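```python
import json
import os

# Save each article's data as a JSON file in the data folder
os.makedirs('data', exist_ok=True)  # create the folder if it doesn't exist yet

for url in crawlList:
    # Use the last segment of the URL (the article's unique ID) as the file name
    articleID = url.split("/")[-1]
    extractedData = ArticleDataExtractor(url)

    my_filename = os.path.join('data', articleID + '.json')
    with open(my_filename, 'w') as fp:
        json.dump(extractedData, fp, indent=4)
```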
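As written, one URL that fails to download or parse will stop the whole loop. A minimal sketch of a more forgiving version is below; `save_articles` and `article_id_from_url` are hypothetical helpers, not part of newspaper. The extractor is passed in as a parameter (for example `ArticleDataExtractor`), any exception it raises is caught and the URL recorded as failed, and the article ID is taken from the URL path so a trailing slash or query string does not produce an empty or odd file name.

```python
import json
import os
from urllib.parse import urlparse


def article_id_from_url(url):
    '''Return the last path segment of a URL, ignoring any query string or trailing slash.'''
    return urlparse(url).path.rstrip('/').split('/')[-1]


def save_articles(urls, extractor, out_dir='data'):
    '''Run `extractor` on each URL and save the result as <out_dir>/<id>.json.

    Returns the list of URLs that failed so they can be retried later.
    '''
    os.makedirs(out_dir, exist_ok=True)
    failed = []
    for url in urls:
        try:
            data = extractor(url)
        except Exception as err:  # e.g. a network error or a page that fails to parse
            print(f'Skipping {url}: {err}')
            failed.append(url)
            continue
        path = os.path.join(out_dir, article_id_from_url(url) + '.json')
        with open(path, 'w') as fp:
            json.dump(data, fp, indent=4)
    return failed
```

Usage would be along the lines of `failed = save_articles(crawlList, ArticleDataExtractor)`, after which the `failed` list can be written out and retried on a later run.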

After running the snippet above, there is a JSON file for each article in a folder called `data`.

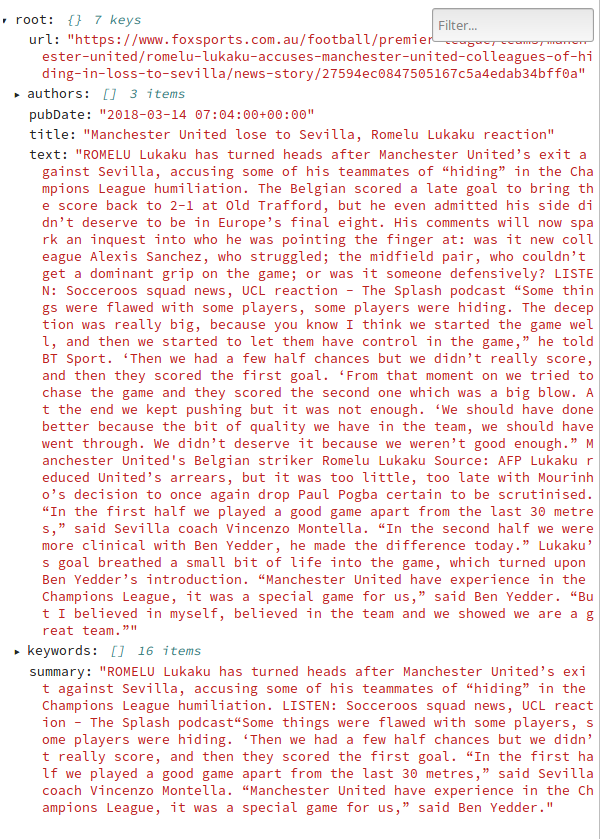

Inspecting one of the json files shows that all the information extracted is in there as expected.
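To work with the saved files later, they can be read back into Python. The sketch below is one way to do it, assuming the layout produced above (one `<article ID>.json` file per article in `data`); `load_articles` is a hypothetical helper name.

```python
import json
import os


def load_articles(folder='data'):
    '''Load every .json file in `folder` into a dict keyed by article ID (the file name).'''
    articles = {}
    for name in sorted(os.listdir(folder)):
        if name.endswith('.json'):
            with open(os.path.join(folder, name)) as fp:
                articles[name[:-len('.json')]] = json.load(fp)
    return articles
```

For example, `for article_id, data in load_articles().items(): print(article_id, data['title'])` lists every saved article with its title.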

We now have a utility for systematically processing a list of article URLs, extracting some data out of them and saving the results to disk for us to access later.

A potential extension to this mini project would be to add some sort of crawler functionality to populate the list of articles. That way we could keep a comprehensive data set about all articles a particular outlet publishes. Alternatively some data analysis could be performed to find the most talked about topics, or even some machine learning to find which articles are most similar to each other through clustering.
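As a small first step towards that analysis, the saved `keywords` lists can already be compared. The sketch below assumes a dict of articles shaped like the saved JSON (for example from loading the `data` folder back in); the `sample` keywords are made up for illustration. It counts how many articles each keyword appears in as a rough measure of the most talked about topics, and, rather than a full clustering algorithm, ranks article pairs by the Jaccard similarity of their keyword sets, a simple overlap score that could later feed a clustering step.

```python
from collections import Counter
from itertools import combinations


def top_keywords(articles, n=10):
    '''Count how many articles each keyword appears in and return the n most common.'''
    counts = Counter()
    for data in articles.values():
        counts.update(set(data.get('keywords', [])))  # set(): count each keyword once per article
    return counts.most_common(n)


def jaccard(a, b):
    '''Jaccard similarity of two keyword lists: shared keywords / all distinct keywords.'''
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0


def most_similar_pairs(articles, n=5):
    '''Rank every pair of articles by the Jaccard similarity of their keywords.'''
    scored = [
        (jaccard(articles[x].get('keywords', []), articles[y].get('keywords', [])), x, y)
        for x, y in combinations(sorted(articles), 2)
    ]
    return sorted(scored, reverse=True)[:n]


# Hypothetical keyword data, not taken from the real articles
sample = {
    'a1': {'keywords': ['socceroos', 'squad', 'norway']},
    'a2': {'keywords': ['socceroos', 'colombia', 'squad']},
    'a3': {'keywords': ['cowboys', 'morgan']},
}
print(top_keywords(sample, 2))
print(most_similar_pairs(sample, 1))  # → [(0.5, 'a1', 'a2')]
```

Keyword overlap is crude, since two articles on the same topic may use different words, but it needs no extra libraries and gives a quick sense of which articles belong together.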