In this part of the series we will walkthrough how to set up a query to run at a regular interval using Python and Cloud Functions.

This is useful particularly when there is a need for some complex logic around parameters in the query are needed.

Check Part 1 for how to use the scheduling feature within BigQuery to run queries regularly.

Set up the google cloud function

Navigate to the Google Cloud Functions page in Google Cloud Platform

Click the Create new function button

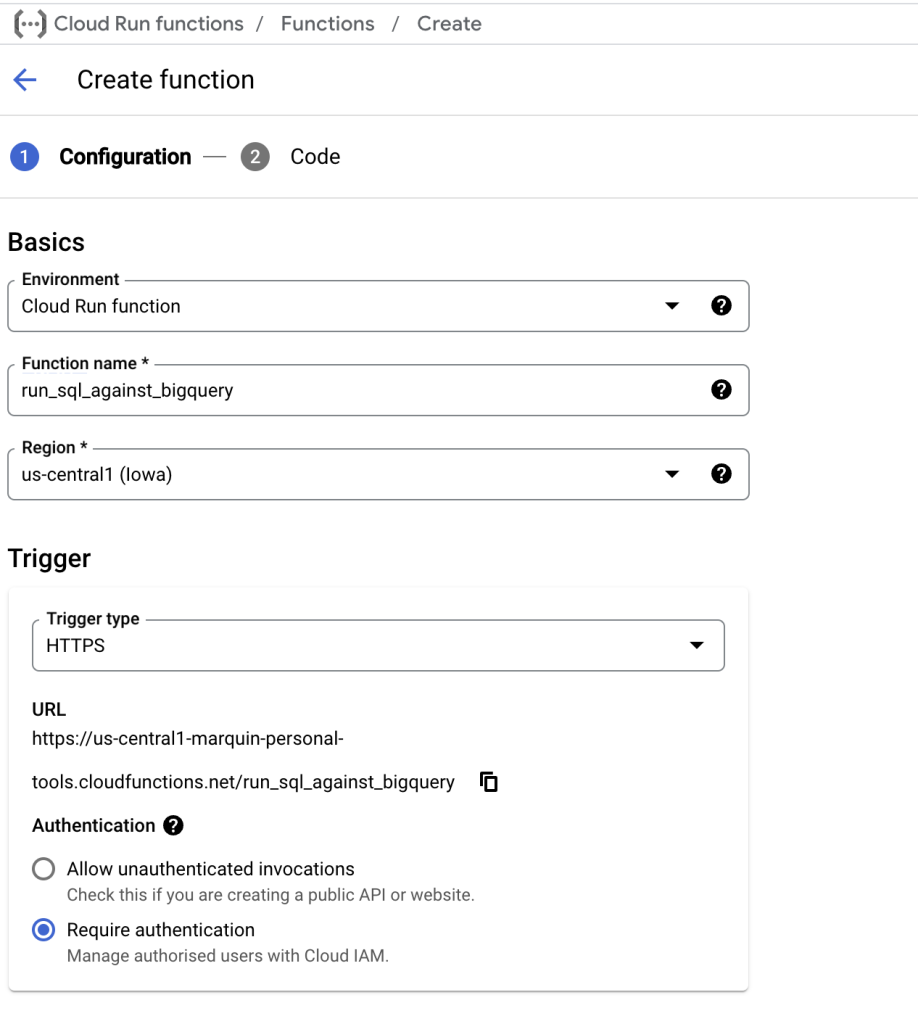

Set cloud function properites

Choose Cloud Run Function, give it a sensible name and set the same region as where the dataset lives that you will be querying.

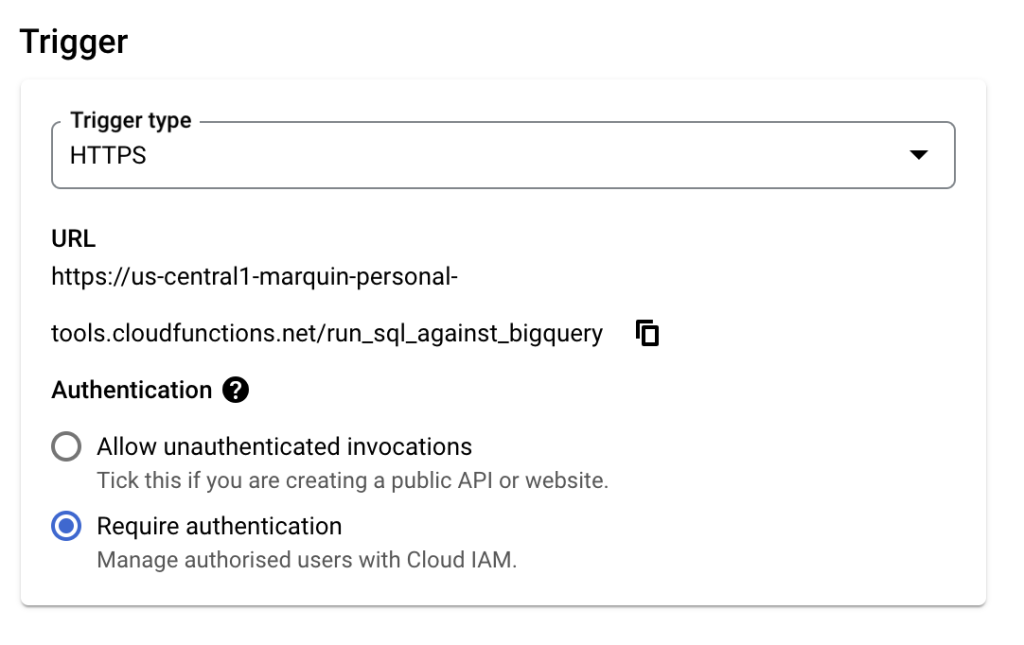

The trigger should be HTTPS and require authentication. Later cloud scheduler can be set up to hit the endpoint in an authenticated manner to trigger the cloud function.

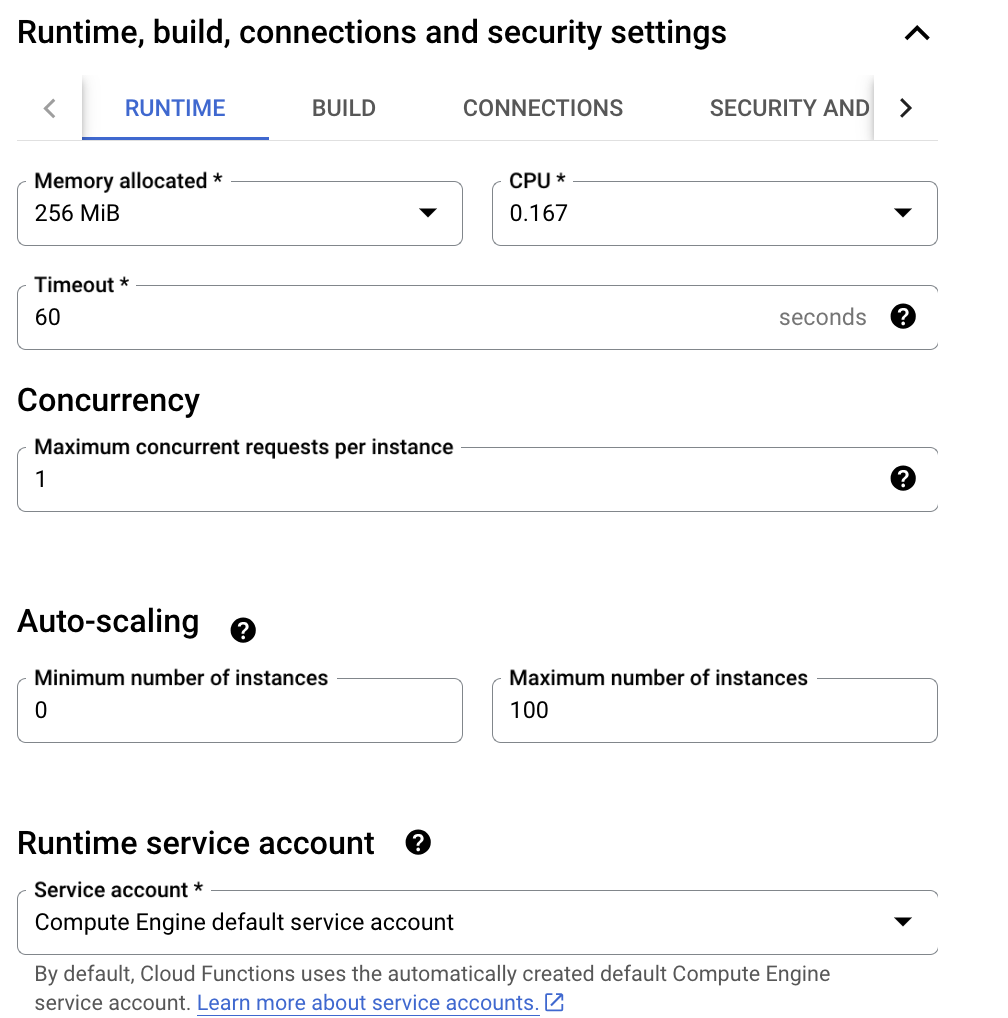

Other default settings will be fine to just execute a query.

If the use case is to do something after the query (eg save to csv, notify on slack) then the cloud function will need to wait until the query is finished so the Timeout field may need to be extended if the query takes longer than 60 seconds.

Click “Next” button

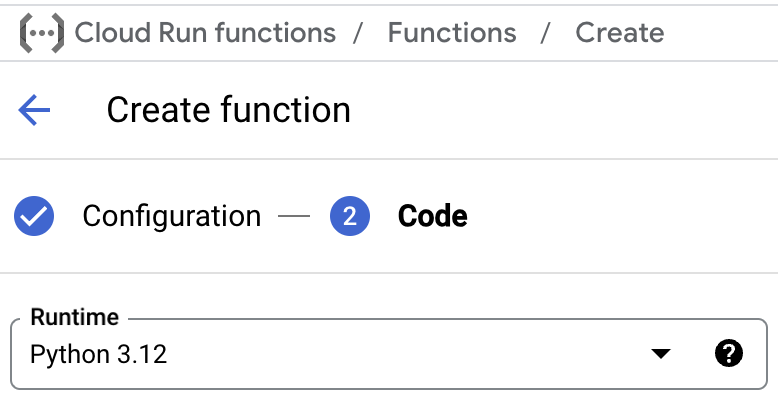

On the cloud function code screen select the python language

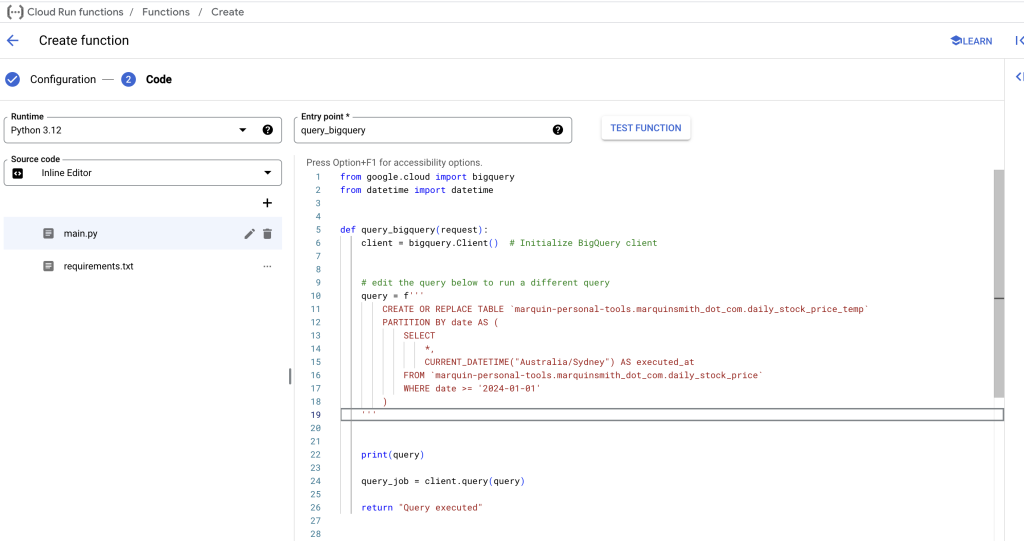

Input the following code snippets into main.py and requirements.txt respectively.

Replace the SQL query string on line 10 of main.py with the SQL code to be executed on a schedule.

main.py

from google.cloud import bigquery

from datetime import datetime

def query_bigquery(request):

client = bigquery.Client() # Initialize BigQuery client

# edit the query below to run a different query

query = f'''

CREATE OR REPLACE TABLE `project.dataset.table2024`

PARTITION BY DATE(date_time) AS (

SELECT

*

FROM `project.dataset.table2`

WHERE date >= '2024-01-01'

)

'''

print(query)

query_job = client.query(query)

return "Query executed"

requirements.txt

google-cloud-bigquery

The “Entry point” input box should contain the name of the function to run when the cloud function is called. Eg. “query_bigquery”

Click “DEPLOY” to make the cloud function live.

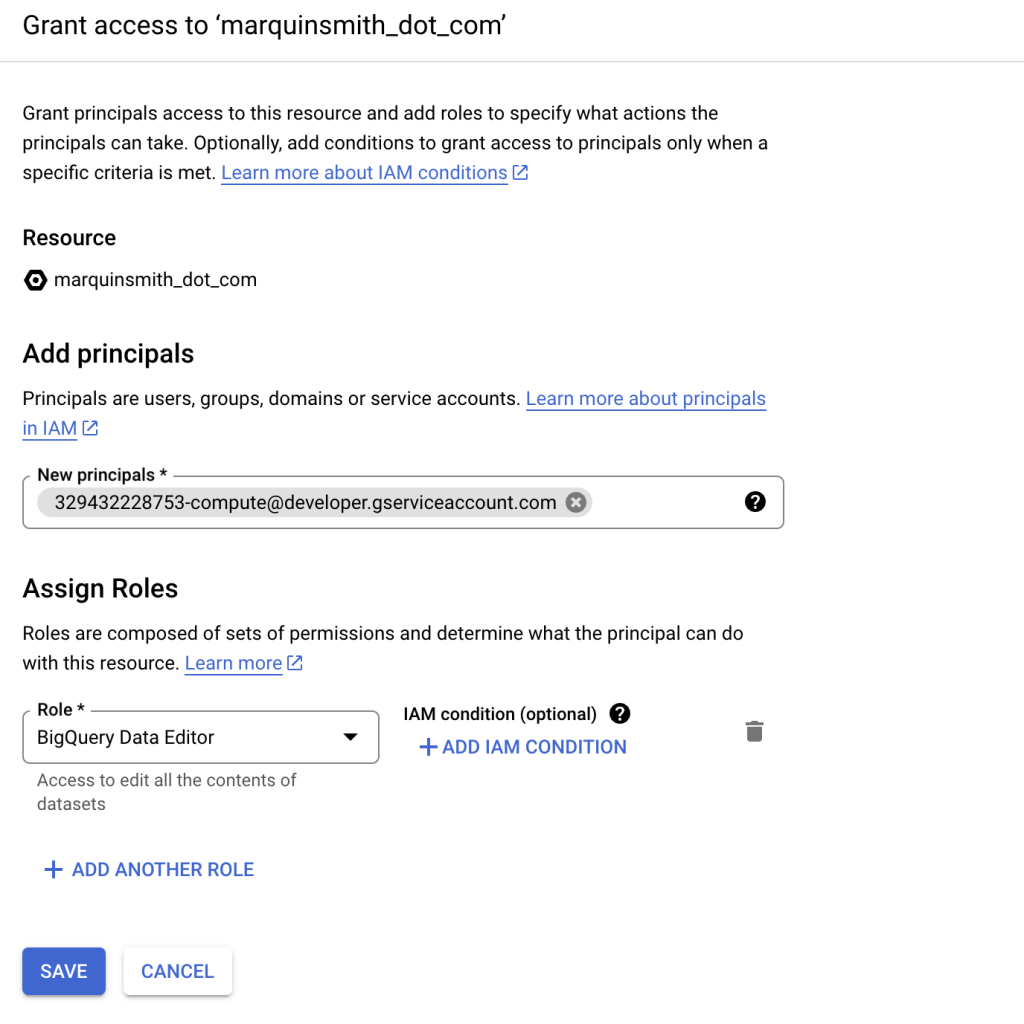

Set up BigQuery permissions for service account used

The Cloud Function uses the permissions of the service account set in the security settings when the cloud function was created.

This service account must have the appropriate permissions in BigQuery for the query to be able to run successfully. Viewer access on the table that is being read from, and Editor access on the table or dataset that is going to be modified by the query.

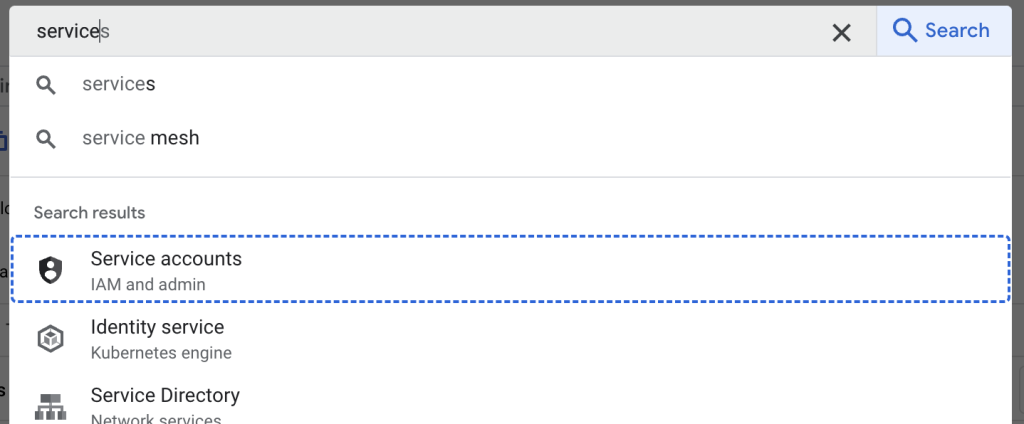

To locate the service account email, navigate to service accounts by searching for “service” in the search bar

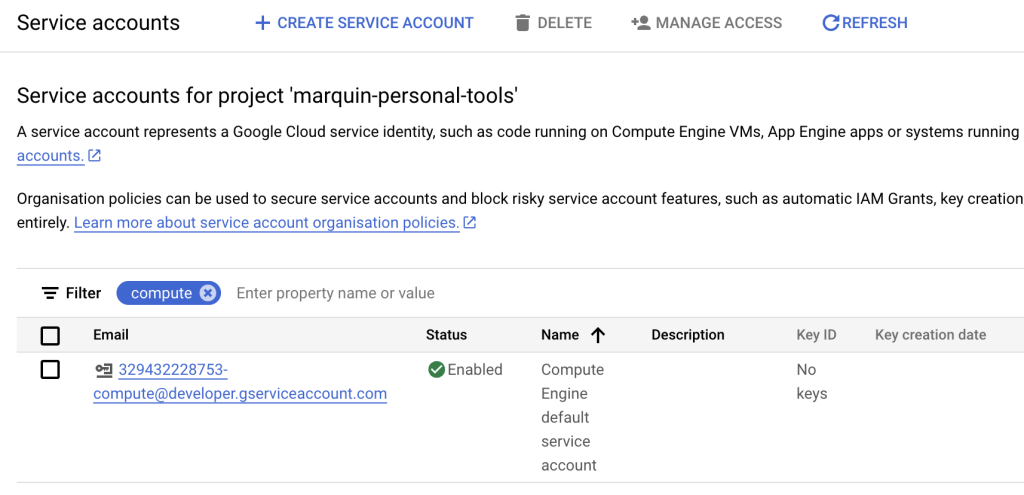

On the service account page there will be a list of service accounts and their email address

Copy the email address and head over to BigQuery.

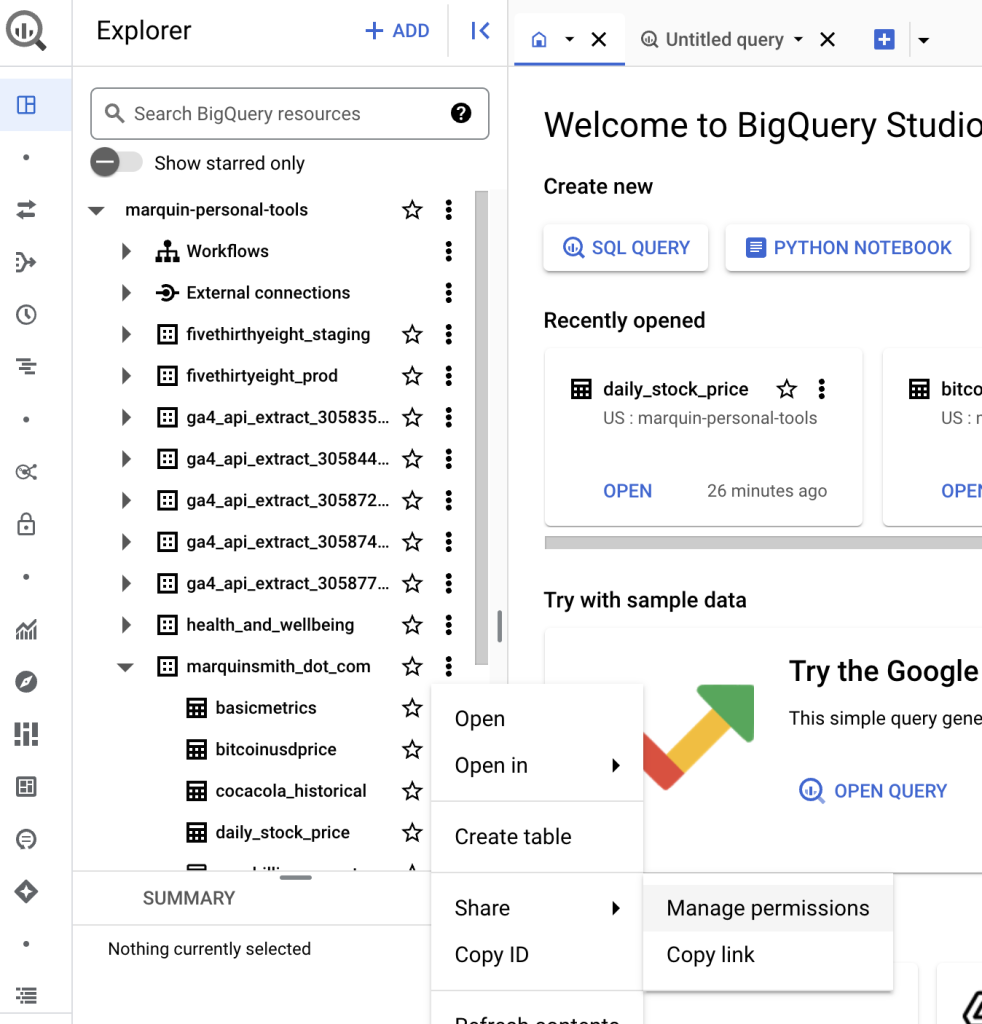

In BigQuery, for the dataset to give access to, click the 3 vertical dots to open the menu. Then “Share”, then click “Manage Permissions”

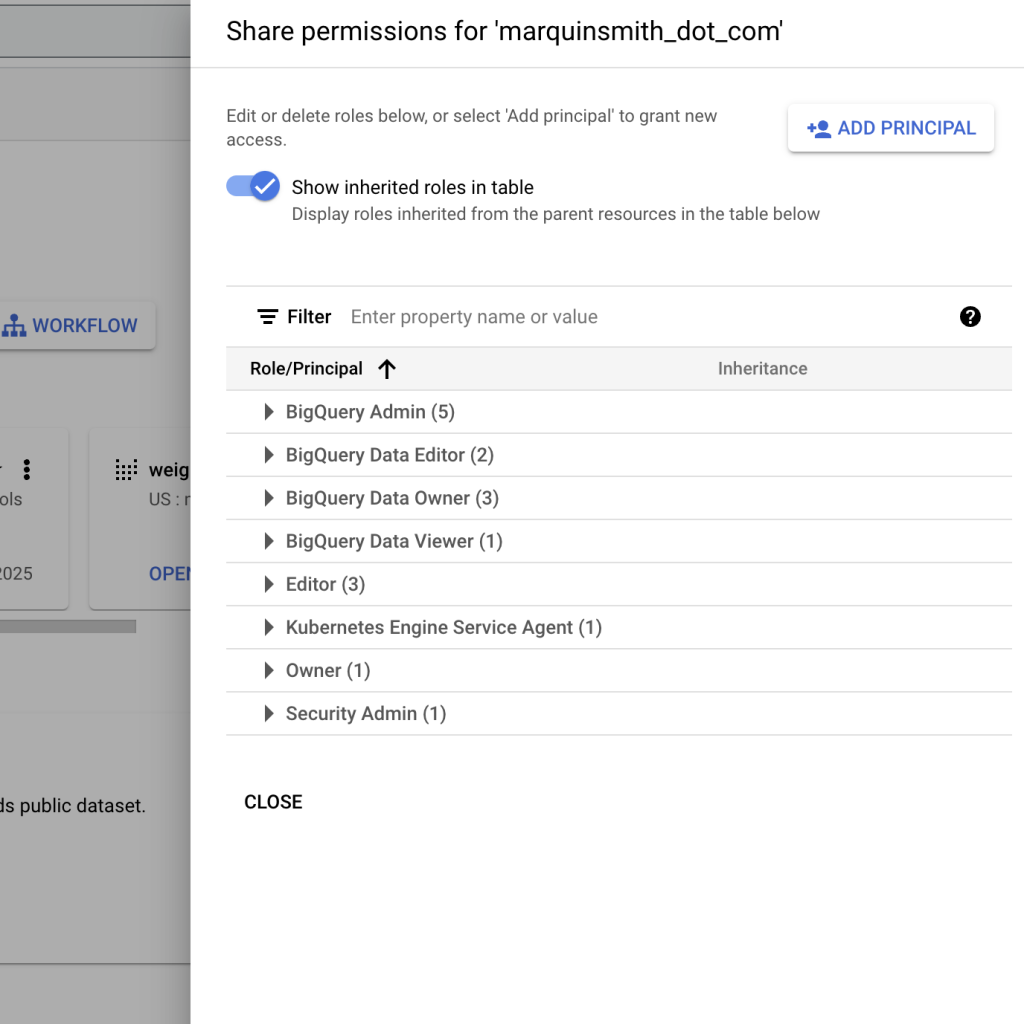

In the menu that appears, click “ADD PRINCIPLE”

Add in the service account email, and assign BigQuery Data Editor (or BigQuery Data Viewer) role to enable access to the service account for BigQuery.

Set up schedule for execution of cloud function

Navigate to Cloud Scheduler

Click “CREATE JOB”

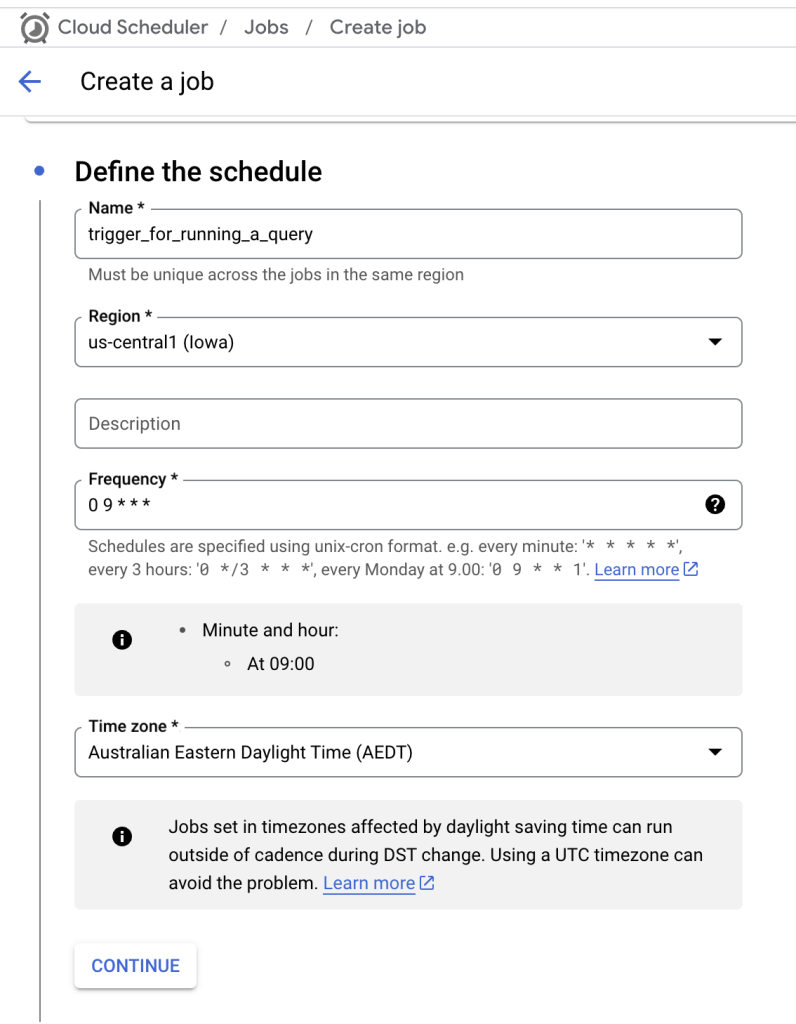

Enter details for the trigger

- Name – The name of the schedule

- Region – The region of the ___

- Description – A description of the schedule

- Frequency – At what schedule this should run

- Timezone – The Timezone for the given frequency. 9am in Australian eastern standard time?

Then click “CONTINUE”

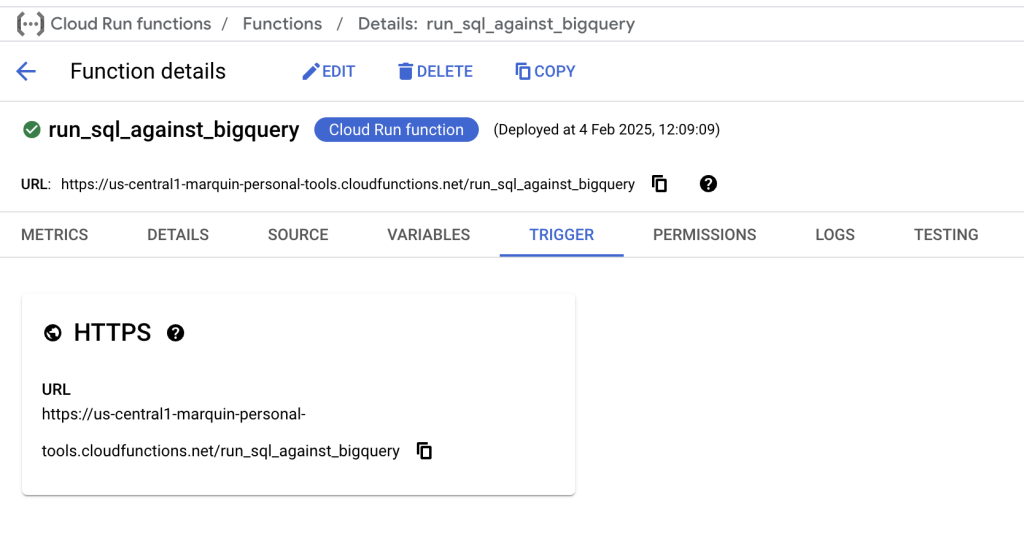

Open the cloud function details in another tab. Copy the URL of the Cloud Function from the Trigger tab of the Cloud Function created earlier

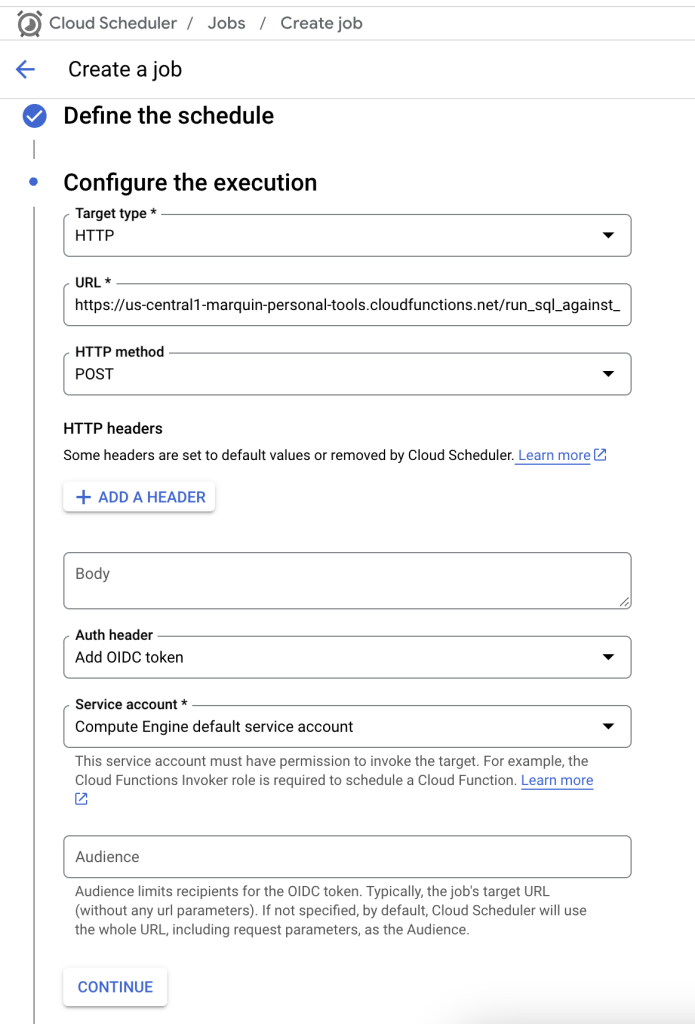

Back to creating the schedule, select “HTTP” as the target type. Input the URL of the cloud function into the URL field.

Select “Add OIDC token” as the Auth header field, and select the same Service account used through the process so far.

The Auth header is needed because previously we selected “Require Authorisation” in the trigger settings for the cloud function. This prevents just anyone from pinging the url to trigger the cloud function.

Click “CONTINUE”

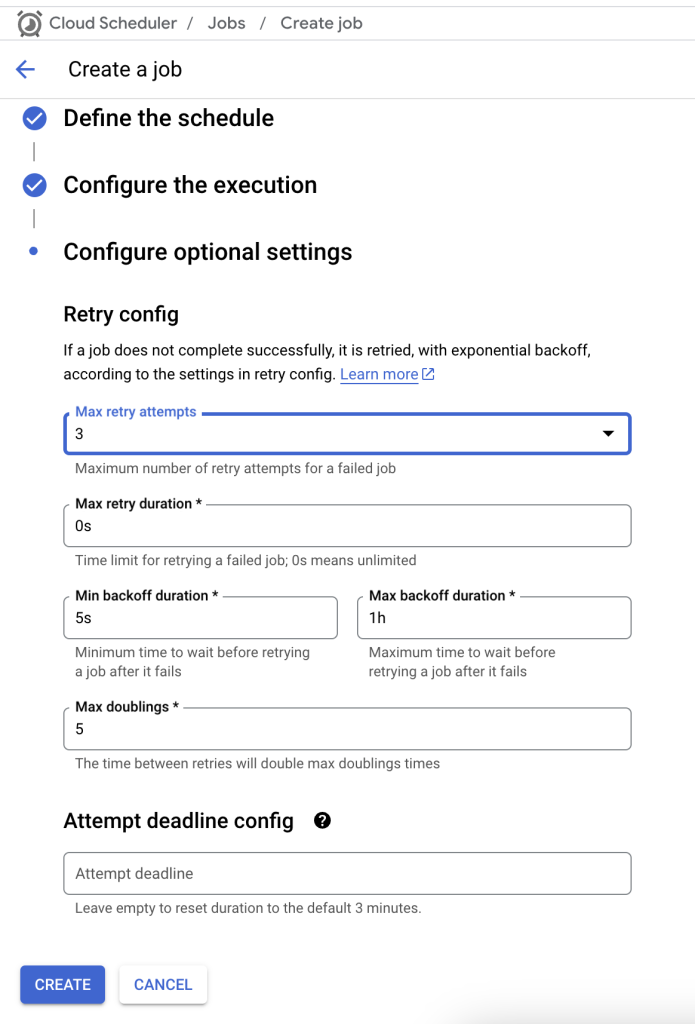

On the optional settings the only thing I change is the max retry attempts. Set this to the number of retries for the scheduler to try the cloud function url. Though this isn’t strictly necessary.

Then click “CREATE”

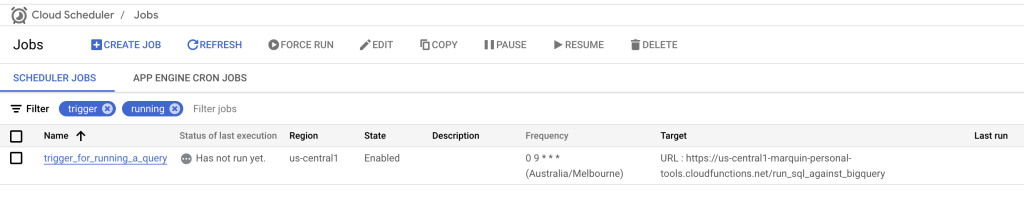

Once the schedule has been created it should look something like this

Success! If everything is working correctly, we have set up a cloud function that will run a python script that executes a query against BigQuery

To test everything works, click the 3 dots on the schedule and click “Force run”. This will trigger the cloud function to execute. Check the Cloud function logs and tables in BigQuery to see if the query ran: